Recently, a research paper from the IoT Smart Robot Team of the School of Automation and the School of Artificial Intelligence at Nanjing University of Posts and Telecommunications was accepted at the prestigious IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2024). The team, led by Professors Baojie Fan and Fengyu Xu, carried out the work together with Master's student Xiaotian Li. Their paper, GAFusion: Adaptive Fusing LiDAR and Camera with Multiple Guidance for 3D Object Detection, introduces a new method for multimodal 3D object detection that fuses LiDAR and camera features in the Bird's Eye View (BEV) representation.

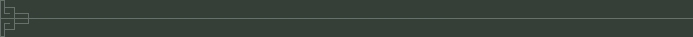

GAFusion uses Sparse Depth Guidance (SDG) and LiDAR Occupancy Guidance (LOG) to generate 3D features rich in depth information. A LiDAR Guided Adaptive Fusion Transformer (LGAFT) then strengthens the interaction between the BEV features of the two modalities from a global perspective. The team also designed a BEV grid that accommodates multi-scale downsampled features, together with a Multi-Scale Dual Path Transformer (MSDPT) that enlarges the receptive fields of the different modal features and significantly improves detection performance. The work is expected to help improve safety in autonomous driving and offers new insights for future research on multimodal 3D object detection. The research was supported by key projects of the National Natural Science Foundation of China and other national grants.
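Sparse depth guidance of this kind typically starts from LiDAR points projected into the camera image. As a rough illustration of that generic step only, the sketch below builds a sparse depth map from points. It assumes a simple pinhole camera with intrinsics `fx`, `fy`, `cx`, `cy` and points already transformed into the camera frame; the function name `sparse_depth_map` is hypothetical, and this is not the authors' GAFusion implementation.

```python
from typing import List, Optional, Sequence, Tuple

Point3D = Tuple[float, float, float]


def sparse_depth_map(
    points_cam: Sequence[Point3D],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
    width: int,
    height: int,
) -> List[List[Optional[float]]]:
    """Hypothetical helper: project camera-frame LiDAR points onto the image.

    Returns a height x width grid holding the nearest depth per pixel,
    or None where no point lands (hence "sparse").
    """
    depth: List[List[Optional[float]]] = [[None] * width for _ in range(height)]
    for x, y, z in points_cam:
        if z <= 0:  # point is behind the camera, so it cannot be seen
            continue
        # Pinhole projection: u = fx * x / z + cx, v = fy * y / z + cy,
        # rounded to the nearest pixel index.
        u = int(round(fx * x / z + cx))
        v = int(round(fy * y / z + cy))
        if 0 <= u < width and 0 <= v < height:
            current = depth[v][u]
            # Keep the closest point when several project to the same pixel.
            if current is None or z < current:
                depth[v][u] = z
    return depth


if __name__ == "__main__":
    pts = [(0.0, 0.0, 10.0), (0.0, 0.0, 5.0), (0.1, 0.0, 10.0),
           (0.0, 0.0, -3.0), (1.0, 0.0, 2.0)]
    d = sparse_depth_map(pts, 100.0, 100.0, 2.0, 2.0, 5, 5)
    for row in d:
        print(row)
```

In this toy example, two points hit the center pixel and only the nearer depth (5.0) is kept, one point lands one pixel to the right, one lies behind the camera, and one projects outside the 5 x 5 image, so most pixels stay empty. Keeping the nearest depth per pixel is a common convention because nearer surfaces occlude farther ones.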

The GAFusion algorithm's framework.

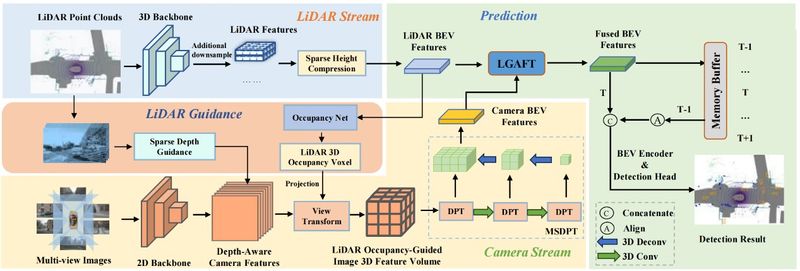

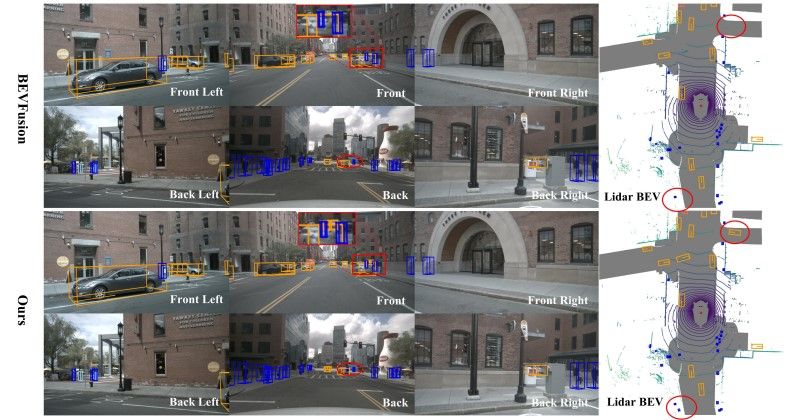

GAFusion visualization results.

CVPR, formally the IEEE/CVF Conference on Computer Vision and Pattern Recognition, is one of the top international academic conferences in artificial intelligence and computer vision and is rated a Category A conference by the China Computer Federation (CCF). According to the latest Google Scholar Metrics, CVPR ranks fourth among all publications, after Nature, The New England Journal of Medicine (NEJM), and Science, and is the highest-ranked conference in computer science.

(Written by Baojie Fan, Initially Reviewed by Fengyu Xu, Edited by Cunhong Wang, Reviewed by Feng Zhang)